That’s a lot of buzzwords you got there mister

I know, I know – while the number of buzzwords in the title might be overwhelming, the simple goal of this post is to introduce users to AWS’ Elastic Beanstalk Worker Tier offering, and give a detailed tutorial on how to build and deploy an application to it.

If you don’t feel like reading the entirety of this tutorial (spoiler alert, it’s long), or want to jump straight to deploying & playing with the default app – skip ahead to the TL;DR section at the end! I promise not to judge. 😀

For this particular tutorial, I’ve chosen to Dockerize my application and back it with an RDS implementation. Dockerization provides flexibility of the application, and an RDS backend is common enough I thought it’d be valuable to put it in the default application. Unfortunately, any new RDS deployment comes with the overhead of deploying inside a VPC. So we’re stuck with that little bit of unwanted complexity.

BUT, to make it up to you all, I promise to include lots of sweet Shaq gifs to dull the VPC-induced pain. Why? Because I found out this existed the other day:

And now I need an excuse to keep searching for Shaq-related images/gifs without my ISP judging me. 😀

Additionally, the skeleton application I’ve included in this tutorial uses some other cool tech. The application itself is written in Python using the Tornado framework, uses SQLAlchemy to manage DB connections, and is deployed inside a Docker container using AWS Cloud Formation. Prerequisite knowledge of Python/Tornado/SQLAlchemy is not needed (since you can easily swap out the web server implementation inside your Docker container), but some knowledge of Docker is suggested.

Prerequisites

- Git

- An AWS Account

- You can sign up for a free tier account here

- AWS CLI Installed & Configured

- You can find instructions for that here

- Nice-to-haves

- Basic Knowledge of Docker

- Basic knowledge of Cloud Formation (and other AWS Resources)

- Not entirely needed for this tutorial, but the example application we’re going to be deploying will be deployed via a provided cloud formation template

- Basic knowledge of Python & Tornado

- Again, not entirely needed for this tutorial, but the example application we’re going to be deploying is written in python + tornado

What is the Elastic Beanstalk Worker Tier (and why you should care)

Amazon’s Elastic Beanstalk Worker Tier is a subset of their Elastic Beanstalk offering. It functions much like its sister web application tier, but instead of directly processing web requests, it uses those web requests to trigger background processes. You can deploy a worker tier alongside a web application tier, allowing you to offload the processing of long-running async tasks to workers, or you can deploy it on its own, using it as a job framework.

The worker tier uses SQS to both trigger jobs and store failed jobs in a dead letter queue. It also includes the nifty feature of providing a crontab with your deployment to schedule periodic tasks.

Much like this strange contraption adorning Shaq’s dome,

you might be wondering why one would choose to use this particular AWS resource over the vast majority of other AWS offerings. Here’s a list of some of the top reasons you might want to consider the worker tier:

- It allows you to offload long-running async tasks from your main web application, giving your web app more processing power to handle incoming requests

- If you’re using it as a standalone worker system, it still comes pre-baked with self managed infrastructure (think auto scaling and provisioning), logging/alerting, DLQ functionality, and more.

- Provides a docker platform by default, and takes care of spinning up an http server (which we’ll discuss how to manage later in the tutorial) for your application to run on, so all you have to do is provide a docker image for deployment.

Our Implementation

Our particular architecture implementation will look similar to the following, super professional, obviously not hand drawn, diagram:

We’re going to be building and deploying a stand-alone worker tier. Our application will be dockerized, and deployed to EC2 instances in an auto-scaling group. The application will also be hooked up to an RDS instance running postgres. We’ll be creating both a job queue and a dead letter queue, and providing a crontab to our application to run periodic tasks. The entire system (minus the dynamo piece which we’ll cover later), will be created inside a VPC, with separate subnets for both the application and RDS instance.

Tutorial

First things first – let’s get us some code.

$ git clone https://github.com/jessicalucci/EB-Worker-RDS-VPC.git

$ cd EB-Worker-RDS-VPC

You should get a directory with the following structure:

.

├── LICENSE

├── README.md

├── deploy

│ ├── Dockerfile

│ ├── build.sh

│ └── config

│ ├── elasticbeanstalk

│ │ ├── Dockerrun.aws.json

│ │ └── cron.yaml

│ └── job-server.json

└── job_server

. ├── requirements.txt

. ├── setup.py

. └── src

. ├── __init__.py

. └── job_server

. ├── __init__.py

. ├── app.py

. ├── config.yaml

. ├── context.py

. ├── db.py

. ├── jobs.py

. └── routes.py

Everything you need to build and perform an automated deploy of the application can be found in here. Let’s walk the repository and dissect what the moving pieces are, and how they interact with each other.

There are two main areas of this repository – the application and the deployment code. Before we get into the nitty gritty of deployments, let’s actually take a look at what our app is doing.

Application

The application is a simple web app, in which we define some HTTP endpoints, and handlers for those endpoints. The worker tier operates by reading messages off our SQS queue, and posting them to a specified endpoint. When you deploy your application, you’ll specify what that endpoint is in configuration.

Routes & Handlers

Our particular application has two endpoints with two separate handlers, defined in

app.py:

The first endpoint /job/run is going to be where we’ll configure our beanstalk app to send tasks to. So, when a message is placed on our SQS queue, it’ll be read off and forwarded to this endpoint, where it’ll be picked up by our RunJobHandler:

The specifics of Tornado’s asynch/coroutine annotations are not important here. The functionality we care about is how the SQS message was translated into an HTTP request. Turns out it’s quite simple – the body of the SQS message becomes the body of the HTTP request. We can easily translate that to JSON, and parse it as needed. Here, we’re assuming that our messages contain the field job_type, which will directly map to the class name of the job we want to run.

All of our periodic tasks will post to this endpoint.
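To make the message-to-job mapping concrete, here is a minimal sketch of the dispatch step, written without Tornado so it runs on its own. The job classes, the JOB_REGISTRY dict, and the dispatch function are hypothetical stand-ins for illustration, not the repository's actual RunJobHandler code; the only thing taken from the post is that the request body is the SQS message body and that job_type names the job class.

```python
import json


class CronJob:
    """Hypothetical periodic job; stands in for a class in jobs.py."""

    def run(self, data):
        return "cron ran"


class IncrementJob:
    """Hypothetical on-demand job that receives the message's data field."""

    def run(self, data):
        return f"stored {data!r}"


# Registry keyed by class name, so job_type maps directly to a job class.
JOB_REGISTRY = {cls.__name__: cls for cls in (CronJob, IncrementJob)}


def dispatch(body):
    """Decode an HTTP request body (the SQS message body) and run the job."""
    message = json.loads(body)
    job_type = message["job_type"]
    job_cls = JOB_REGISTRY.get(job_type)
    if job_cls is None:
        raise ValueError(f"unknown job_type: {job_type}")
    return job_cls().run(message.get("data"))
```

In a Tornado handler, something like dispatch(self.request.body) inside the post method would play the same role. Rejecting unknown job types up front means a bad message fails fast and can end up in the dead letter queue instead of silently doing nothing.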

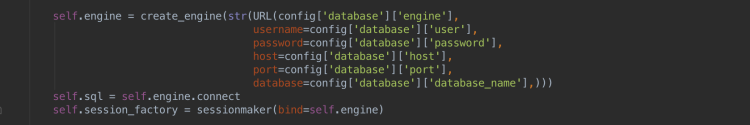

One other thing worth pointing out here (as I don’t believe it’s immediately obvious), is the database session management. If you don’t care about how this particular application operates, feel free to skip ahead to the next route description. If you do care, take a quick look at how the database connection is being initialized in context.py:

Instead of directly returning a connection object to our datastore, we’re actually sending back a session maker for each request to use. Tornado is an asynchronous web framework that doesn’t come with session management out of the box. This means we need to do our own session management, scoping our database connections to the request object. This way multiple requests can execute at the same time, each creating and managing their own sessions. If one request fails, it’ll roll back its specific transaction, without affecting any other currently running requests. There’s a great blog post here that describes this session management in greater detail.
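The request-scoped pattern can be sketched in a few lines. This is a generic illustration under my own assumptions, not the code in context.py: session_scope and FakeSession are hypothetical names, and FakeSession only exists so the example runs without a database. With SQLAlchemy, the factory passed in would be whatever sessionmaker(bind=engine) returns.

```python
from contextlib import contextmanager


@contextmanager
def session_scope(session_factory):
    """Give one request its own session: commit on success, roll back on error."""
    session = session_factory()
    try:
        yield session
        session.commit()
    except Exception:
        session.rollback()
        raise
    finally:
        session.close()


class FakeSession:
    """Stand-in for a SQLAlchemy session so the sketch runs without a database."""

    def __init__(self):
        self.events = []

    def commit(self):
        self.events.append("commit")

    def rollback(self):
        self.events.append("rollback")

    def close(self):
        self.events.append("close")
```

Each request would wrap its database work in "with session_scope(factory) as session:", so a failure in one request rolls back only that request's transaction.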

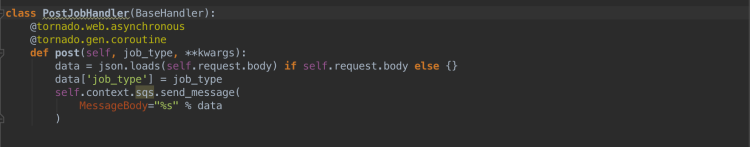

Our second specified endpoint, /job/post, is required for non-periodic tasks. If we’re not automatically triggering a job, we need an endpoint to hit that will request for that job to run!

This handler is fairly straightforward. It accepts a query param, job_type, which it then posts, along with the initial request’s body, to the SQS queue. Since our application is already listening to this queue, as soon as the message posts, it’ll be picked up and processed by our original RunJobHandler.
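Here is a rough sketch of what "post the job_type along with the request body" could look like. It assumes the request body is a JSON object (or empty) and that job_type is merged into it as a top-level field; the repository may combine them differently. build_job_message and post_job are hypothetical helpers, and send is any callable that delivers a string to the queue.

```python
import json


def build_job_message(job_type, request_body):
    """Merge the job_type param into the request body to form one SQS message body."""
    # Assumption: the body is a JSON object, or empty for jobs that take no data.
    payload = json.loads(request_body) if request_body else {}
    payload["job_type"] = job_type
    return json.dumps(payload)


def post_job(job_type, request_body, send):
    """Queue a job; `send` is any callable that delivers a string to the queue."""
    message = build_job_message(job_type, request_body)
    send(message)
    return message
```

With boto3, send could be a small wrapper around an SQS client's send_message(QueueUrl=..., MessageBody=message) call. Injecting it as a callable keeps the message-building logic testable without touching AWS.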

And that’s really the extent of configuring our web application/routes! Now, let’s take a quick look at what our default jobs will be doing.

Jobs

I’ve included two very simple jobs. One is a periodic job (which we’ll see configured in our crontab in a moment), and simply logs the time it was run:

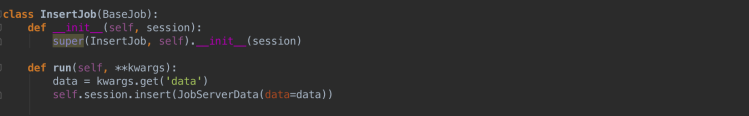

The second job will not run automatically, and will have to be triggered by a call to our second endpoint. It will insert whatever data it receives from the data key in the SQS message into the job_server_data table:

Aaand that sums up our application code. Nothing too tricky here – just some straightforward routing.

Deployment

Alright! Now we’re getting into the good stuff. But, before we dive in, here’s one more Shaq gif to give your mind a fun break.

Docker

The first thing of note in the deployment directory is the Dockerfile. There’s nothing terribly exciting going on in here – just the installation of our application and its dependencies.

The existence of the Dockerfile is important when we look at the Dockerrun.aws.json file in the elasticbeanstalk directory.

ElasticBeanstalk

Dockerrun.aws.json

The Dockerrun file specifies a set of instructions that tells elastic beanstalk how to run our Docker image. AWS gives us an option of either loading a pre-built image through the Dockerrun file (by specifying the Image key), or including a Dockerfile to be built at deploy time. While specifying a pre-existing image is arguably easier, it adds a layer of complexity concerning docker registries/authentication mechanisms that I wanted to leave out of this tutorial. So, we’ll be leaving the Image tag out, and letting EB build our Dockerfile for us.

As such, our Dockerrun.aws.json file is very simple:

All we’re doing is telling AWS what version of the Dockerrun file we’re using (Version 1 means a single container deployment), to open our container on port 8080, and to write the application logs to /var/log/job-server.
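For reference, a version 1 Dockerrun file with just those three settings can be generated like this. It is a sketch built from the description above (version 1, port 8080, logs in /var/log/job-server) using the standard Dockerrun keys; the file in the repository may contain more than this.

```python
import json

# The three settings described above; no "Image" key, so Elastic Beanstalk
# builds the image from the Dockerfile shipped alongside this file.
dockerrun = {
    "AWSEBDockerrunVersion": "1",
    "Ports": [{"ContainerPort": "8080"}],
    "Logging": "/var/log/job-server",
}

dockerrun_json = json.dumps(dockerrun, indent=2)
print(dockerrun_json)
```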

cron.yaml

This is the crontab that will be loaded onto our job servers to schedule periodic tasks. Each entry consists of three parts:

- name: unique identifier for this job

- url: what url should be posted to when this cron job is triggered

- schedule: cron schedule for job
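To show how those three parts fit together, here is a small sketch that renders entries into the cron.yaml layout (a version line followed by a cron list). The render_cron_yaml helper, the entry name, and the five-minute schedule are made up for illustration; the /job/run url is the endpoint described earlier.

```python
REQUIRED_KEYS = ("name", "url", "schedule")


def render_cron_yaml(entries):
    """Render periodic-task entries as cron.yaml text (version 1 layout)."""
    lines = ["version: 1", "cron:"]
    for entry in entries:
        missing = [key for key in REQUIRED_KEYS if key not in entry]
        if missing:
            raise ValueError(f"cron entry missing keys: {missing}")
        lines.append(f' - name: "{entry["name"]}"')
        lines.append(f'   url: "{entry["url"]}"')
        lines.append(f'   schedule: "{entry["schedule"]}"')
    return "\n".join(lines) + "\n"


# Hypothetical entry: post to /job/run every five minutes.
example = render_cron_yaml(
    [{"name": "cron-job", "url": "/job/run", "schedule": "*/5 * * * *"}]
)
print(example)
```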

ebextensions

Here’s where things start to get a little more interesting. Elastic Beanstalk allows you to define a .ebextensions directory to specify files that will manage environment configuration. I won’t go into detail about all the things you can do with this directory, but if you’re interested, you can find some documentation here.

I’ve included two configuration files in this directory for us. While they’re both fairly simple config files, they touch on some very important aspects of how elastic beanstalk works, so we’ll review both:

- 00-files.config

- One of the benefits of using EB that was mentioned earlier, was that EB spins up and maintains an HTTP server for us, so we only have to worry about programming our application. By default, this HTTP server is provided in the form of a dockerized Nginx container that sits in front of your application. One of the issues with this (which I believe has been fixed in later platform versions), is that that Nginx deployment uses the default Nginx config. This means that certain behaviors, like the acceptance of large request bodies, won’t be allowed. This file overrides some of those defaults to mirror a more production-like Nginx setup.

- 01-resources.config

- Wait – what? We’re managing Dynamo here? Where the heck did that even come from?

So, fun fact – Amazon uses dynamo to manage the crontab file/scheduling for your periodic tasks. Is this mentioned anywhere in their documentation? Nope. Have I figured out why they’re using dynamo for this yet? Nope. Have I just accepted the fact that this is part of my life now? Yep. Also, it turns out you can’t specify what that dynamo table will be when you create your beanstalk environment. You can only specify the table through deployment of your application, in a resource config, which is what we’re doing here.


Cloud Formation

One of the worst parts about deploying infrastructure is trying to automate the process. Luckily for us, Amazon has provided a tool called Cloud Formation that allows you to write infrastructure as code. This allows for repeatable, reliable deployments.

Our Cloud Formation template is defined in deploy/config/job-server.json. I don’t want to describe the entirety of the template, but I will cover some of the highlights.

IAM

The CF Template will create a single instance profile that will get assigned to each EC2 instance in our beanstalk environment. This instance profile will give our beanstalk application permission to write logs to its log stream (default policy attached to all beanstalk instances), permission to read from the dynamo cron table, and permission to read/write from the job queue.

You’ll notice there are no permissions included here to allow access to the RDS instance. This is because network access between EC2 instances and RDS instances is managed through security groups:

We simply allow ingress traffic from the EC2 (Beanstalk) security group into the RDS Security Group.
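As an illustration of that ingress rule, here is roughly what such a resource looks like in a Cloud Formation template, built as a Python dict and dumped to JSON. The logical names (RDSIngressFromBeanstalk, RDSSecurityGroup, BeanstalkSecurityGroup) are hypothetical, and port 5432 assumes the default postgres port; check the repository's template for the real values.

```python
import json

rds_ingress = {
    # Hypothetical logical name for the ingress rule.
    "RDSIngressFromBeanstalk": {
        "Type": "AWS::EC2::SecurityGroupIngress",
        "Properties": {
            # Security group attached to the RDS instance (hypothetical name).
            "GroupId": {"Fn::GetAtt": ["RDSSecurityGroup", "GroupId"]},
            "IpProtocol": "tcp",
            # Assumption: postgres listening on its default port.
            "FromPort": 5432,
            "ToPort": 5432,
            # Only traffic from the beanstalk instances' group is allowed in.
            "SourceSecurityGroupId": {
                "Fn::GetAtt": ["BeanstalkSecurityGroup", "GroupId"]
            },
        },
    }
}

print(json.dumps(rds_ingress, indent=2))
```

Referencing the source security group instead of an IP range means new instances launched by the auto-scaling group get database access automatically.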

VPC

Alright, alright, alright. It’s time for…

V-V-V-V-V-V-VPC (at least that’s what I’m imagining Shaq is saying here).

As I mentioned earlier, any new generation of RDS _must_ be spun up in a VPC. This adds a little extra layer of complexity to get your application stood up, but once you understand how Amazon’s VPCs work it’s really not so bad (and it provides a great extra layer of security).

The main pieces of the VPC we’re creating and managing are the VPC subnets.

Subnets are subsets of your CIDR block, and can be public (accessible from the outside world), or private. Our cloud formation is setting up 3 separate public subnets, 2 for our RDS instance (each in a different availability zone to prevent downtime in the case of an availability zone outage), and one that contains all of the beanstalk application (both the ELBs and EC2 instances).

Because we’re putting both the ELB and EC2 instances in a single subnet, we have to include the following in our template:

that explicitly tells the vpc to associate the public ip address with our ELB. If we don’t include this snippet, any request in to our app will hang forever.

Other Resources

The rest of the pieces of our infrastructure (EB App/Env, SQS, RDS) are all managed through cloud formation as well, but once you’re familiar with cloud formation syntax, their configuration is pretty straightforward.

Again, this is just a brief overview of all the things included in the Cloud Formation template. CF does have a steep learning curve, so if you have any questions about any of the settings, I’m more than happy to provide you with answers. (:

TL;DR // Deploy All the Things

- Clone Repo

$ git clone https://github.com/jessicalucci/EB-Worker-RDS-VPC.git

$ cd EB-Worker-RDS-VPC

- EC2 Key Pair

- You’ll need to create an EC2 Key Pair (you can’t do this through cloud formation) for SSH access to your boxes.

- Once you’ve created your Key Pair, you’ll need to put its name into the cloud formation template under EC2KeyPairName

- CF

- You’ll need to put your AWS Account Number into the cloud formation template at AWSAccountNumber

- Once your cloud formation template is filled out (and edited as you’d like), you’ll deploy the template via the AWS CLI:

$ aws --profile <PROFILE> --region <REGION> cloudformation create-stack --stack-name job-server --capabilities CAPABILITY_IAM --template-body file://deploy/config/job-server.json

- Build Application

- Once your cloud formation stack has spun up (this will take a while, thanks to RDS deploys), you’ll need to update the application config to point at your new DB host. You can find the RDS host name in the AWS Console.

- Bundle the application for deployment. I’ve included a short script to automate the process for you. Just run:

$ cd deploy && ./build.sh

- Take the zip file generated by the build script and deploy it to your beanstalk environment via the AWS console.

- Unfortunately, at this time there’s no “one-step” command to deploy a zip file to a beanstalk environment via the command line. You have to first upload the zip file to an s3 bucket, and then deploy the s3 file to your application. This requires another set of IAM privileges for your deployment, so I figured the manual step was worth the tradeoff of reduced complexity. (:

- Test it out!

- You should start to see log output from your CronJob appear in the log stream that corresponds to your beanstalk environment name (the default will be test-logs)

- You can either post a message directly to your SQS Job Queue to trigger the IncrementJob

- You can do this by navigating to and selecting your Job Queue in the AWS Console, then clicking “Send Message” from the “Queue Actions” drop down. You’ll want to send a message with this format:

- {"job_type": "IncrementJob", "data": "sendin some sweet data yo"}

- or you can post a request to your application at /job/post/IncrementJob to let your application take care of scheduling the job. You’ll know it ran successfully when you see the data you’ve passed in the message written to your database instance.

And that’s it folks! If you have any questions, please feel free to comment below, or reach me through my contact page!